In the driver command, orion12 refers to the location of the master node. Then, in another terminal on your local machine, forward the notebook port from the driver. Two notes apply: you need ProxyJump set up for the forwarding to work, and YY is the orion machine you started pyspark on (it can be any machine you want). Now you can open the forwarded port (XX075) in a browser on your machine and access your notebook from there.

To launch a Spark standalone cluster with the launch scripts, create a file called conf/workers in your Spark directory. It must contain the hostnames of all the machines where you intend to start Spark workers, one per line.
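As a minimal sketch, the workers file can be written from the shell. The hostnames below are hypothetical placeholders. The script writes into `$SPARK_CONF_DIR` when it is set, because that is the directory the launch scripts read. Otherwise it falls back to `$SPARK_HOME/conf`, and then to `./conf`.

```shell
#!/bin/sh
# Sketch: create the workers file read by sbin/start-all.sh.
# Hypothetical hostnames: replace them with your own worker machines.
set -eu

# Launch scripts read $SPARK_CONF_DIR/workers, defaulting to $SPARK_HOME/conf.
conf_dir="${SPARK_CONF_DIR:-${SPARK_HOME:-.}/conf}"
mkdir -p "$conf_dir"

# One hostname per line.
cat > "$conf_dir/workers" <<'EOF'
orion02
orion03
orion04
EOF

echo "Workers listed in $conf_dir/workers:"
cat "$conf_dir/workers"
```

With the file in place, running `$SPARK_HOME/sbin/start-all.sh` on the master node starts a master there and, over SSH, a worker on each listed host. `$SPARK_HOME/sbin/stop-all.sh` stops them again.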

Install Apache Spark standalone driver

```shell
# (You only need this once if it was already configured for Hadoop)
export SPARK_HOME="/usr/local/spark-3.1.2-bin-hadoop3.2"
export SPARK_CONF_DIR="$:/home2/anaconda3/bin"
export PYSPARK_PYTHON=/home2/anaconda3/bin/python3
export PYSPARK_DRIVER_PYTHON_OPTS='notebook --no-browser --port=XX075'
# - NOTE: Replace XX with your port range prefix.
```

Remember to source your shell configuration files again for the changes to take effect. If you'd prefer to just use the Python shell, you can leave out the PYSPARK_DRIVER_PYTHON lines. ipython is another nice alternative to Jupyter.

The configuration above starts the Jupyter notebook server on port XX075. Run pyspark to start the driver (ideally inside tmux, screen, etc.), then forward the notebook port to your local machine.
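The port arithmetic and the tunnel can be sketched as follows. The sketch assumes a hypothetical prefix of `12` and a hypothetical driver host `orion05`. It only prints an `ssh -N -L` command and does not open the tunnel itself. The `-L` spec forwards your local port to the same port on the driver, where Jupyter listens on localhost by default. The sketch also assumes ProxyJump is already configured in `~/.ssh/config`.

```shell
#!/bin/sh
# Sketch: print the SSH tunnel command for the pyspark notebook.
# Hypothetical values: use your own XX prefix and orionYY driver host.
port_prefix="12"
driver_host="orion05"

# "XX075" means the prefix followed by the digits 075, e.g. 12 -> 12075.
notebook_port="${port_prefix}075"

# local_port:host:remote_port, where "localhost" is resolved on the driver.
forward_spec="${notebook_port}:localhost:${notebook_port}"

# -N: forward only, run no remote command. ProxyJump comes from ~/.ssh/config.
echo "On your local machine, run: ssh -N -L $forward_spec $driver_host"
echo "Then open: http://localhost:$notebook_port"
```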

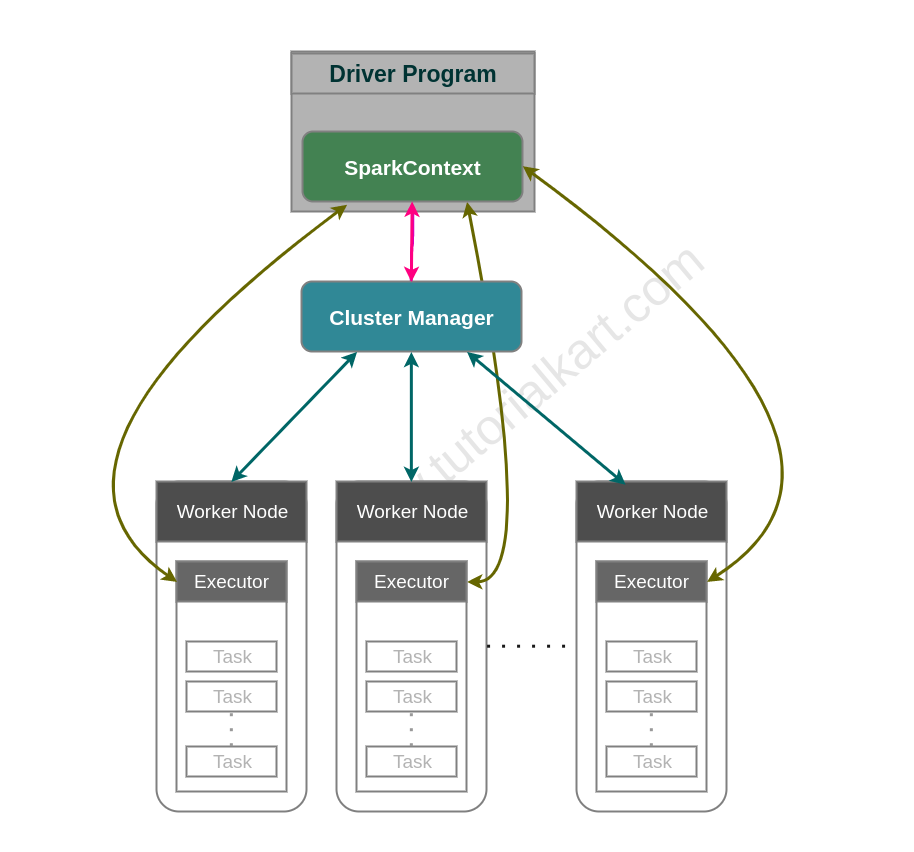

Background: Spark

The two main components in Spark are the Cluster Manager and the Workers. The Workers run a Spark Executor that manages Java processes (tasks). To run a Spark job, you'll need a Driver. This can be a machine in the cluster or your own laptop; it manages the state of the job and submits tasks to be executed. Each driver has a Spark context, which you'll see when you start spark-shell or pyspark.

Platform requirements for a standalone installation: Ubuntu 14.04 or later; other Linux flavors such as CentOS and Red Hat also work.

The official documentation outlines setting up a Spark cluster. We will walk through the setup here, but if you run into trouble or want to delve deeper into the configuration, start there.

We need to set up some environment variables for the various Spark components. Make sure you are using the latest version of Java. Then add the Spark environment variables shown above to your shell configuration file (.zshenv if you are a zsh user).
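If you prefer to script this step, the sketch below appends the settings only once, guarded by a marker comment. It makes two assumptions. First, zsh users keep these variables in `~/.zshenv` and everyone else in `~/.bashrc`. Second, Spark's `bin/` directory should go on `PATH`; that line does not appear in the original configuration. The marker text itself is arbitrary.

```shell
#!/bin/sh
# Sketch: append Spark settings to the shell startup file exactly once.
# Assumption: zsh users use ~/.zshenv, everyone else ~/.bashrc.
case "$(basename "${SHELL:-/bin/bash}")" in
  zsh) rc_file="$HOME/.zshenv" ;;
  *)   rc_file="$HOME/.bashrc" ;;
esac

marker="# >>> spark setup >>>"

# grep -qF fails if the marker (or the file) is missing, so we append then.
if ! grep -qF "$marker" "$rc_file" 2>/dev/null; then
  cat >> "$rc_file" <<'EOF'
# >>> spark setup >>>
export SPARK_HOME="/usr/local/spark-3.1.2-bin-hadoop3.2"
export PYSPARK_PYTHON=/home2/anaconda3/bin/python3
# Assumption: put Spark's bin/ (pyspark, spark-submit) on PATH
export PATH="$SPARK_HOME/bin:$PATH"
# <<< spark setup <<<
EOF
  echo "Appended Spark settings to $rc_file"
else
  echo "Spark settings already present in $rc_file"
fi
```

Running it a second time leaves the file unchanged. Afterwards, source the file (for example `. ~/.bashrc`) or open a new terminal.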

Install Apache Spark standalone

Spark is a cluster computing framework that supports working sets (distributed shared memory) and has a less restrictive programming interface than MapReduce. This allows iterative algorithms, such as machine learning models, to execute efficiently across large, distributed datasets. While it is possible to run Spark jobs under YARN, we will configure a standalone cluster in this guide. For storage, we'll use our existing HDFS cluster.

Spark 3.1.2 is available in /usr/local/spark-3.1.2-bin-hadoop3.2. As with Hadoop, we will set up a local configuration in your home directory. If you want to run Spark on your own computer, install it with your package manager (on macOS, you can use brew install apache-spark), or download the binary distribution.
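For a machine outside the cluster, here is a sketch that fetches the same build used on the cluster (Spark 3.1.2 for Hadoop 3.2) from the Apache release archive. The install directory `~/opt` and the `DOWNLOAD` switch are hypothetical. By default the script only prints the package name and URL.

```shell
#!/bin/sh
# Sketch: locate (and optionally fetch) the Spark 3.1.2 / Hadoop 3.2 build.
# Assumption: Apache archive URL layout; ~/opt is a hypothetical location.
set -eu

spark_version="3.1.2"
hadoop_profile="hadoop3.2"
pkg="spark-${spark_version}-bin-${hadoop_profile}"
url="https://archive.apache.org/dist/spark/spark-${spark_version}/${pkg}.tgz"
install_dir="${HOME}/opt"

echo "Package: $pkg"
echo "URL:     $url"

# Set DOWNLOAD=1 to download and unpack (needs curl and network access).
if [ "${DOWNLOAD:-0}" = "1" ]; then
  mkdir -p "$install_dir"
  curl -fL "$url" | tar -xzf - -C "$install_dir"
  echo "Now set SPARK_HOME=$install_dir/$pkg"
fi
```

On macOS, `brew install apache-spark` is simpler. However, Homebrew installs the current release rather than 3.1.2, so the version may differ from the cluster's.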
